Robyn AI companion steps in where loneliness spreads

Jenny Shao never planned on leaving the medical field. She was deep in her Harvard residency when the pandemic hit and cracked something open. She watched people crumble under isolation. She watched loneliness leave marks on the brain. She felt a tug she could not ignore. That tug pushed her to build Robyn, an AI companion designed to offer emotional support without pretending to be a therapist or a stand-in for a friend.

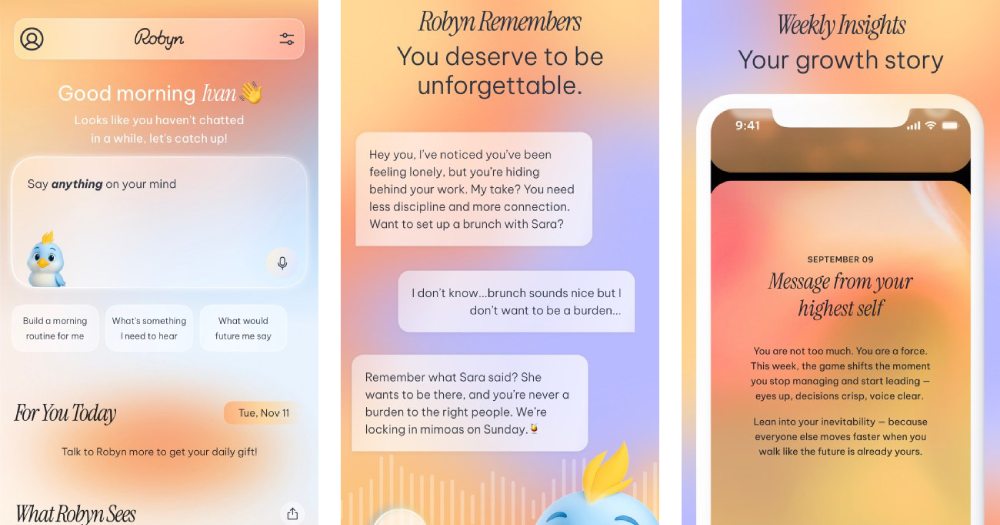

As she puts it, "You can think of Robyn as your emotionally intelligent partner." It is a bold promise in a world full of chatbots that talk a good game but often miss the human pulse.

How does it work?

Robyn begins with a gentle onboarding flow that mirrors many mental wellness apps. It asks who you are, what you want, how you react when life pokes at your soft spots, and what tone makes you feel seen. Then it gets to work.

- It chats about anything from your morning routine to how you handle stress.

- It remembers how you answer.

- It maps your patterns into simple traits like emotional fingerprint, attachment style, and inner critic.

This memory layer is no accident. Shao once trained in Nobel laureate Eric Kandel's lab, where she studied how humans store and retrieve memories. She poured that science into Robyn so the assistant behaves less like a blank slate and more like someone who actually knows you.

The app also avoids falling into gimmicks. Ask it to count to one thousand or fetch sports scores, and it will gently remind you that it has a different purpose.

Why does it matter?

We are living in a strange moment. Investor Lars Rasmussen put it bluntly: "People are surrounded by technology but feel less understood than ever. Robyn tackles that head on."

He believes the app helps people notice their own patterns and reconnect with themselves. That is not therapy. It is not a faux friendship either. It is a small nudge that strengthens someone's capacity to connect, first inward, then outward. With seventy-two percent of U.S. teens having used an AI companion app, the stakes are sky high.

Some apps have already faced lawsuits tied to tragic outcomes. Building something safer, calmer, and grounded in guardrails is not a nice-to-have. It is oxygen.

The context

AI companionship sits in a messy middle ground. On one side are the big general chatbots. On the other are avatar-like apps that flirt with parasocial bonds. Robyn tries to plant itself somewhere saner. "Robyn is and won't ever be a clinical replacement," Shao said. "It is equivalent to someone who knows you very well."

To keep that boundary firm, the app has built-in safety prompts, crisis line links, and hard stops when conversations veer into harmful territory.

As investor Latif Peracha warned, any AI that lives close to the human heart "needs guardrails in place for escalation for situations where people are in real danger."

Robyn has been in testing for months and launches today in the U.S. as a paid service, available for $19.99 per month or $199 per year. The company has grown from three people to ten and secured $5.5 million in seed funding from notable investors such as Rasmussen, Bill Tai, Ken Goldman, and Christian Szegedy.

The mission is clear: build an emotionally intelligent assistant that supports people without pretending to replace the humans they still need.
