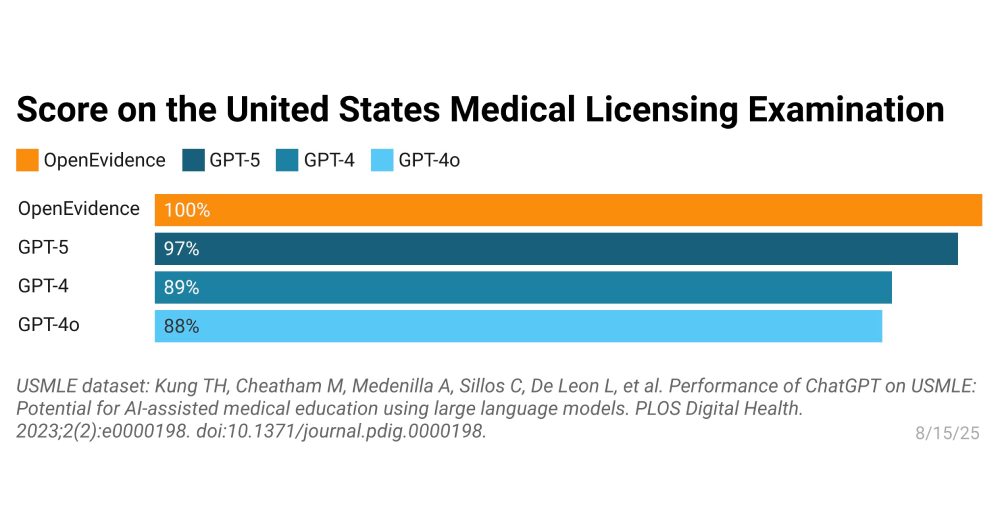

OpenEvidence’s latest model is the first LLM to notch a perfect score on the U.S. medical licensing exam

Every so often, a breakthrough jolts even the most jaded corners of medicine. OpenEvidence, a clinical decision support platform already trusted by tens of thousands of U.S. physicians, has just done that. Its AI system became the first in history to score a flawless 100% on the United States Medical Licensing Examination (USMLE) — a test that's humbled generations of doctors-in-training.

For context, OpenEvidence was also the first AI to break the 90% barrier on the exam. This time, it went one better: perfection. And it didn't just spit out right answers. It showed its work, citing references from bedrock journals like The New England Journal of Medicine and JAMA.

How does it work?

At its core, the AI isn't a black box cranking out multiple-choice guesses. Instead:

- It explains why each answer is correct, weaving in reasoning and citations.

- It draws from gold-standard medical sources — think FDA labels, peer-reviewed research, and the journals doctors actually rely on.

- It even caught errors in the official exam data. In one case, seven independent psychiatrists reviewed a disputed Step 3 question and agreed that the AI's answer was correct, with the FDA label for Wellbutrin cited as supporting evidence.

The model was evaluated on the question sets used by Kung et al. (2023), which are drawn from the official USMLE sample exam published on usmle.org. The team cleaned the data, fixed errors in how answers had been recorded, and excluded questions that depend on images. The result: every remaining text-based question answered correctly.

Why does it matter?

Medical education has always been expensive — and unequal. Access to the best prep materials is often a privilege, not a given. OpenEvidence says it wants to flip that script.

Over the coming academic year, the company plans to roll out tools that:

- Create case-based learning modules tailored to training level.

- Provide explanations grounded in current literature.

- Support clinicians not just in medical school but throughout their careers.

"More than 100 million Americans this year will be treated by a doctor who used OpenEvidence," the company says. Even allowing for some marketing gloss, the figure is a sobering reminder that tools like these shape real patient care.

The context

For the uninitiated, the USMLE is a grueling three-step hurdle. It doesn't just test memory; it probes how candidates apply knowledge to real-world clinical scenarios. Passing it (or, for osteopathic physicians, the COMLEX-USA) is required for medical licensure in the U.S., and it is widely regarded as one of the toughest exams in the world.

OpenEvidence isn't some fringe experiment. It's already the fastest-growing medical search engine in the country, used daily in more than 10,000 hospitals and by over 40% of U.S. physicians. The platform adds 75,000 new verified clinicians each month, an adoption rate rarely seen for any technology in healthcare.

Founded by Daniel Nadler and Zachary Ziegler, OpenEvidence was built on a mission "to organize and expand global medical knowledge." Nadler's inclusion in the TIME100 Health list of the most influential people in global health this year underscores just how seriously the field is taking this shift.

In short, what began as a medical search tool is rapidly becoming something bigger: a living, breathing layer of intelligence woven into the practice of modern medicine. And with a perfect USMLE score now in its pocket, OpenEvidence has made its case that AI isn't just keeping pace — it's setting the bar.

💡Did you know?

You can take your DHArab experience to the next level with our Premium Membership.

🛠️Featured tool

Easy-Peasy

An all-in-one AI tool offering the ability to build no-code AI bots, create articles and social media posts, convert text into natural speech in 40+ languages, create and edit images, generate videos, and more.
