AI model uses facial expressions to predict patient decline with near-perfect accuracy

Early detection of patient deterioration is critical for improving outcomes and saving lives. A recent study published in the journal "Informatics" takes a step forward by using artificial intelligence (AI) to predict patient decline from facial expressions with near-perfect accuracy.

This approach could change how healthcare providers monitor and respond to patient conditions in clinical settings.

How does it work?

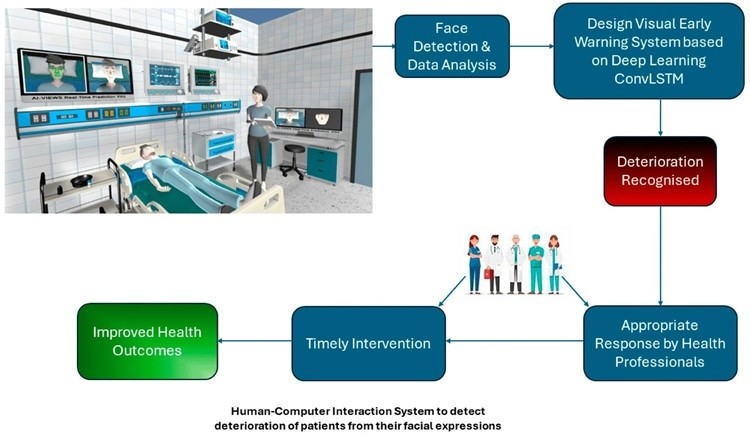

The researchers developed a Convolutional Long Short-Term Memory (ConvLSTM) model that merges the capabilities of convolutional neural networks (CNNs) and long short-term memory (LSTM) cells. This combination enables the model to capture both the spatial features of facial expressions and their temporal dynamics, allowing it to accurately predict patient deterioration over time.
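The article does not publish the model's code or layer configuration. As a rough illustration of the mechanism, here is a minimal single-channel ConvLSTM cell in plain Python. It follows the standard ConvLSTM formulation (Shi et al., 2015) but omits its peephole connections. The weights are random and untrained, and the frame size, kernel size, and names such as `ConvLSTMCell` and `run_sequence` are hypothetical. A real model would use a deep-learning framework, many channels, and learned weights.

```python
import math
import random
from typing import List, Tuple

Grid = List[List[float]]  # one single-channel feature map (rows x cols)


def zeros(rows: int, cols: int) -> Grid:
    return [[0.0] * cols for _ in range(rows)]


def conv2d_same(x: Grid, k: Grid) -> Grid:
    """Cross-correlate x with an odd-sized kernel k, zero-padded so the output keeps x's shape."""
    rows, cols = len(x), len(x[0])
    pr, pc = len(k) // 2, len(k[0]) // 2
    out = zeros(rows, cols)
    for r in range(rows):
        for c in range(cols):
            s = 0.0
            for a, krow in enumerate(k):
                for b, w in enumerate(krow):
                    rr, cc = r + a - pr, c + b - pc
                    if 0 <= rr < rows and 0 <= cc < cols:
                        s += w * x[rr][cc]
            out[r][c] = s
    return out


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class ConvLSTMCell:
    """Hypothetical single-channel ConvLSTM cell: each gate convolves the frame and the previous hidden state."""

    GATES = ("i", "f", "g", "o")  # input, forget, candidate, output

    def __init__(self, ksize: int = 3, seed: int = 0) -> None:
        rng = random.Random(seed)

        def kernel() -> Grid:
            return [[rng.uniform(-0.1, 0.1) for _ in range(ksize)] for _ in range(ksize)]

        # Untrained, randomly initialised weights (illustration only).
        self.wx = {gate: kernel() for gate in self.GATES}
        self.wh = {gate: kernel() for gate in self.GATES}
        self.b = {gate: 0.0 for gate in self.GATES}

    def step(self, x: Grid, h: Grid, c: Grid) -> Tuple[Grid, Grid]:
        rows, cols = len(x), len(x[0])
        pre = {}
        for gate in self.GATES:
            zx, zh = conv2d_same(x, self.wx[gate]), conv2d_same(h, self.wh[gate])
            pre[gate] = [[zx[r][q] + zh[r][q] + self.b[gate] for q in range(cols)] for r in range(rows)]
        new_h, new_c = zeros(rows, cols), zeros(rows, cols)
        for r in range(rows):
            for q in range(cols):
                i = sigmoid(pre["i"][r][q])
                f = sigmoid(pre["f"][r][q])
                g = math.tanh(pre["g"][r][q])
                o = sigmoid(pre["o"][r][q])
                new_c[r][q] = f * c[r][q] + i * g  # cell state carries information across frames
                new_h[r][q] = o * math.tanh(new_c[r][q])
        return new_h, new_c


def run_sequence(cell: ConvLSTMCell, frames: List[Grid]) -> Grid:
    """Feed video frames through the cell in order and return the final hidden state."""
    rows, cols = len(frames[0]), len(frames[0][0])
    h, c = zeros(rows, cols), zeros(rows, cols)
    for x in frames:
        h, c = cell.step(x, h, c)
    return h


# Usage: four random 6x6 "frames" standing in for pre-processed face crops.
rng = random.Random(1)
frames = [[[rng.random() for _ in range(6)] for _ in range(6)] for _ in range(4)]
final_h = run_sequence(ConvLSTMCell(), frames)
print(len(final_h), len(final_h[0]))  # 6 6
```

Because each gate is a convolution rather than a dense matrix product, the hidden state remains a spatial map the same size as the frame. This lets the model track where on the face a change happens as well as when it happens.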

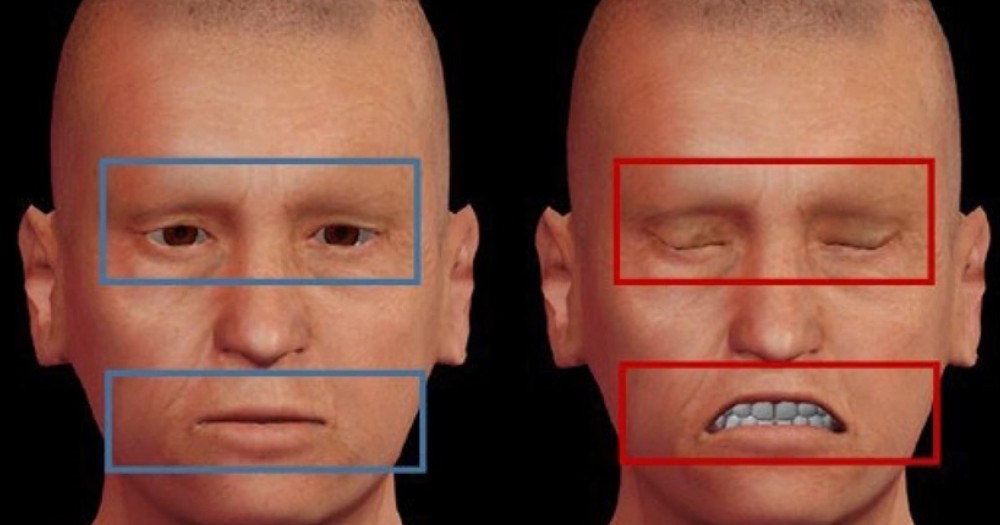

The process began by creating a dataset of 3D animated avatars that mimicked real human faces, representing various age groups, ethnicities, and facial features. These avatars were programmed to display specific facial expressions related to health decline, resulting in a comprehensive dataset of video clips. The dataset underwent rigorous pre-processing, including face detection and balancing techniques, to ensure accurate training and testing of the model.
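The article does not say which balancing technique the authors used. Random oversampling is one common option: clips from under-represented classes are duplicated until every class is as large as the biggest one. The sketch below assumes that technique; the function name `random_oversample`, the clip IDs, and the class names are hypothetical and are not the study's five classes.

```python
import random
from collections import Counter, defaultdict
from typing import Dict, List, Tuple


def random_oversample(clips: List[str], labels: List[str], seed: int = 0) -> Tuple[List[str], List[str]]:
    """Duplicate randomly chosen clips of smaller classes until every class matches the largest one."""
    rng = random.Random(seed)
    by_class: Dict[str, List[str]] = defaultdict(list)
    for clip, label in zip(clips, labels):
        by_class[label].append(clip)
    target = max(len(group) for group in by_class.values())
    out_clips, out_labels = [], []
    for label, group in by_class.items():
        # Keep every original clip, then add random duplicates to reach the target count.
        extra = [rng.choice(group) for _ in range(target - len(group))]
        for clip in group + extra:
            out_clips.append(clip)
            out_labels.append(label)
    return out_clips, out_labels


# Usage with hypothetical clip IDs and class names.
clips = [f"clip_{i:02d}" for i in range(10)]
labels = ["pain"] * 5 + ["fear"] * 3 + ["sadness"] * 2
bal_clips, bal_labels = random_oversample(clips, labels)
print(Counter(bal_labels))  # every class now has 5 clips
```

Oversampling like this should be applied only to the training split. Duplicating clips before splitting would let copies of the same clip land in both training and test sets and inflate the test scores.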

The ConvLSTM model was then trained on this dataset to recognize five key facial expression classes associated with patient deterioration. It was evaluated with several metrics, including accuracy, precision, and recall, and scored highly on all of them, reaching an accuracy of 99.89% on this synthetic dataset.
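To make the reported metrics concrete, the sketch below computes accuracy and per-class precision and recall from lists of true and predicted labels. The article does not say how precision and recall were averaged across the five classes, so macro averaging (a plain mean over classes) is an assumption here, and the labels are a hypothetical toy example rather than the study's data.

```python
from typing import Dict, List


def classification_metrics(y_true: List[str], y_pred: List[str]) -> Dict[str, float]:
    """Accuracy plus macro-averaged precision and recall (assumed averaging scheme)."""
    classes = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precisions, recalls = [], []
    for c in classes:
        tp = sum(t == c and p == c for t, p in pairs)  # correctly predicted as c
        fp = sum(t != c and p == c for t, p in pairs)  # wrongly predicted as c
        fn = sum(t == c and p != c for t, p in pairs)  # c predicted as something else
        precisions.append(tp / (tp + fp) if tp + fp else 0.0)
        recalls.append(tp / (tp + fn) if tp + fn else 0.0)
    return {
        "accuracy": accuracy,
        "macro_precision": sum(precisions) / len(classes),
        "macro_recall": sum(recalls) / len(classes),
    }


# Hypothetical toy labels: one "pain" clip is misclassified as "fear".
y_true = ["pain", "pain", "fear", "fear", "sadness"]
y_pred = ["pain", "fear", "fear", "fear", "sadness"]
metrics = classification_metrics(y_true, y_pred)
print({k: round(v, 3) for k, v in metrics.items()})
# {'accuracy': 0.8, 'macro_precision': 0.889, 'macro_recall': 0.833}
```

For scale, an accuracy of 99.89% corresponds to roughly 11 misclassified predictions per 10,000. Accuracy alone can hide a class the model handles poorly, which is why per-class precision and recall are worth reporting alongside it.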

Why does it matter?

The implications of this research are profound. Facial expressions are powerful indicators of a person's emotional and physical state. By leveraging AI to analyze these expressions, healthcare providers can potentially identify critical health issues before they become life-threatening. As the study's authors note, "Accurate recognition of emotions like pain, sadness, and fear can help in the early detection of patient deterioration, improving care and outcomes."

This AI-driven approach could transform patient monitoring in hospitals, especially in intensive care units where rapid response is crucial. The high accuracy of the ConvLSTM model suggests that it could be a reliable tool in the early detection of health risks, leading to more timely and effective interventions.

The context

The study builds on a long history of research into facial expressions as a window into human emotions and health. From Charles Darwin's early work on the universality of facial movements to the development of the Facial Action Coding System (FACS) by Ekman and Friesen, the study of facial expressions has evolved significantly. Today, it intersects with advanced fields like computer vision and machine learning, opening new avenues for applications in healthcare.

However, the study also highlights a significant limitation: its reliance on synthetic data. Ethical concerns prevented the collection of real patient data, particularly in critical care settings, which raises questions about the model's performance in real-world scenarios. The authors acknowledge this gap and emphasize the need for future research using real patient data to validate their findings.

In conclusion, this study represents a promising step forward in using AI to enhance patient care. While further validation is needed, particularly with real-world data, the ConvLSTM model's ability to predict patient decline with such high accuracy suggests a future where AI plays a central role in safeguarding patient health.

