Yale study uncovers racial bias in ChatGPT’s radiology reports

A recent Yale study published in the journal Clinical Imaging raises concerns about potential racial bias in the output of OpenAI's GPT-3.5 and GPT-4.0 when the models are told a patient's race.

The study highlights the implications of including racial information in AI-driven healthcare applications, raising questions about how this data influences the AI's output and the broader impact on patient care.

How did it work?

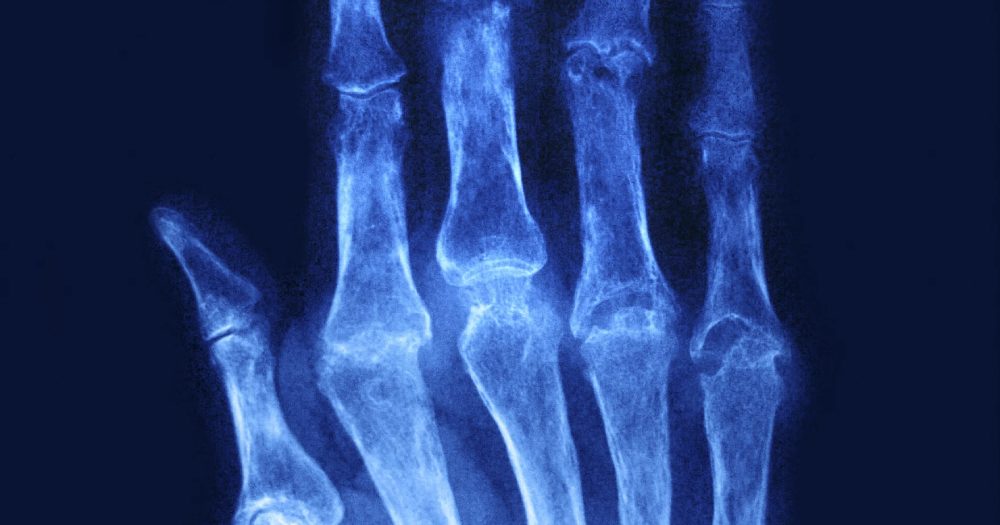

Researchers at Yale set out to evaluate how well OpenAI's ChatGPT simplifies medical language for patients. They fed ChatGPT more than 700 radiology reports and asked it to translate complex medical terms into layman's terms. For example, when a radiology report mentioned "Kerley B lines," ChatGPT simplified this to indicate the presence of excess fluid in the lungs.

However, the study took a significant turn when researchers also provided the AI with the race of the person for whom the medical information was being simplified. They found that the reading grade levels of the simplified reports varied depending on the race specified.

According to Dr. Melissa Davis, co-author of the study and vice chair of Medical Informatics at Yale, "We found that white and Asian patients typically had a higher reading grade level. If I said 'I am a Black patient,' or 'I am an American Indian patient' or 'Alaskan Native patient,' the reading grade levels would actually drop."
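For readers curious how a "reading grade level" can be measured, here is a minimal Python sketch. It assumes the widely used Flesch-Kincaid grade formula; the article does not say which readability metric the Yale team used, so this is an illustration rather than the study's method. The function names, the syllable heuristic, and the two sample sentences are all hypothetical.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic: count vowel groups, discounting a trailing silent 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def fk_grade(text: str) -> float:
    """Flesch-Kincaid grade level (assumed metric, not confirmed by the study)."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one word")
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59


# Hypothetical sample sentences, not taken from the study's data.
technical = "Kerley B lines are present bilaterally, suggesting interstitial pulmonary edema."
plain = "There is extra fluid in the lungs."

print(f"technical: {fk_grade(technical):.1f}")
print(f"plain:     {fk_grade(plain):.1f}")
```

Running the same scoring over simplified reports generated with different stated races, and comparing the averages, is one way a gap like the one Dr. Davis describes could be detected. The syllable counter is approximate, so scores from this sketch are only good for rough comparisons.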

Why does it matter?

This finding raises important ethical considerations in the deployment of AI in healthcare. The simplification of medical information to lower reading levels based on race could lead to miscommunication and unequal treatment of patients.

Dr. Davis points out that while ChatGPT excelled in simplifying reports, the inclusion of patients' race as a socio-economic determinant should be reconsidered. Instead, factors like education level or age might be more appropriate metrics for tailoring medical communication.

The context

The use of AI in healthcare is rapidly expanding, both in Connecticut and across the nation. For instance, Hartford HealthCare has recently established the Center for AI Innovation in Healthcare, partnering with the Massachusetts Institute of Technology and Oxford University.

"AI stands poised to profoundly reshape healthcare delivery, impacting access, affordability, equity, and excellence," said Dr. Barry Stein, the center's chief clinical innovation officer.

Moreover, researchers at UConn Health are leveraging AI to enhance early diagnosis of lung cancer. Dr. Omar Ibrahim, associate professor of medicine and director of interventional pulmonology at UConn Health, emphasizes the potential of AI in shifting the stage of cancer diagnosis.

"Using the AI technology of Virtual Nodule Clinic, I can find the patients who need care, before they even realize it themselves, and get them treated at the earliest possible stage," he explains.

Elsewhere, we reported on Moderna's expanded partnership with OpenAI to advance mRNA medicine.

What's next?

The Yale study underscores the need for careful consideration of which demographic and socio-economic details are supplied to AI models. As AI continues to advance in the medical field, ensuring that it operates without bias and supports equitable patient care will be crucial.

The findings from this study serve as a reminder of the complexities involved in integrating AI into healthcare and the ongoing need for vigilance and ethical oversight.
